Opti-Acoustic Semantic SLAM with Unknown Objects in Underwater Environments

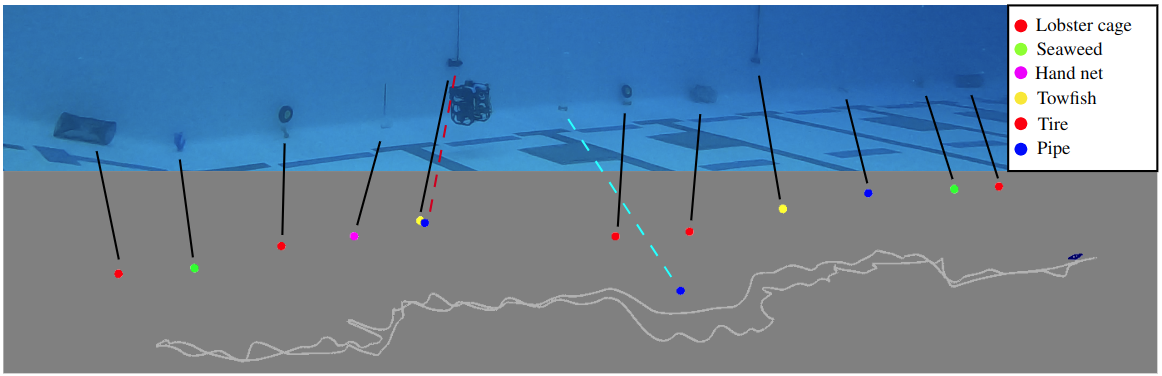

The upper portion of the image shows an underwater vehicle navigating an environment in which various objects have been placed.

The trajectory map below shows the estimated trajectory of the vehicle and the locations of the objects encountered during the mission. Colors are randomly assigned to each semantic class. Black lines represent correct matches, a red dashed line marks a false positive caused by a feature-embedding error, and a cyan dashed line marks an object match that is misaligned in the map because of an erroneous sonar range value.


Abstract

Despite recent advances in semantic Simultaneous Localization and Mapping (SLAM) for terrestrial and aerial applications, underwater semantic SLAM remains an open and largely unaddressed research problem due to the unique sensing modalities and the differing object classes. This paper presents a semantic SLAM method for underwater environments that can identify, localize, classify, and map a wide variety of marine objects without a priori knowledge of the scene’s object makeup. The method performs unsupervised object segmentation and object-level feature aggregation, and then uses opti-acoustic sensor fusion for object localization, with probabilistic data association and graphical models for back-end inference. Indoor and outdoor underwater datasets with a wide variety of objects and challenging acoustic and lighting conditions are collected for evaluation. The datasets are made publicly available. Quantitative and qualitative results show that the proposed method achieves lower trajectory error than baseline methods and obtains map accuracy comparable to a baseline closed-set method that requires hand-labeled data of all objects in the scene.
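The combination of object-level feature aggregation and probabilistic data association described above can be illustrated with a minimal sketch. It assumes that the per-pixel features inside each segmentation mask are mean-pooled into one descriptor, and that each existing landmark is scored by adding a cosine-similarity term on descriptors to a Gaussian log-likelihood on 3D position, alongside a fixed score for the hypothesis that the detection is a new object; a softmax turns these scores into probabilities. The function names and the parameters `sigma_pos`, `temp`, and `new_score` are hypothetical illustrations, not the paper's formulation.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def mean_pool(pixel_features: List[Vector]) -> List[float]:
    """Aggregate the per-pixel features inside one mask into a single descriptor."""
    n = len(pixel_features)
    dim = len(pixel_features[0])
    return [sum(f[i] for f in pixel_features) / n for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0.0 and nb > 0.0 else 0.0


def association_probs(
    det_desc: Vector,
    det_pos: Vector,
    landmarks: List[Tuple[Vector, Vector]],  # (descriptor, 3D position) per landmark
    sigma_pos: float = 1.0,   # hypothetical position std-dev [m]
    temp: float = 0.1,        # hypothetical temperature on the semantic term
    new_score: float = -4.0,  # hypothetical log-score for "this is a new object"
) -> List[float]:
    """Return [p_new, p_1, ..., p_K] for a single detection (hypothetical scoring)."""
    log_scores = [new_score]
    for desc, pos in landmarks:
        d2 = sum((a - b) ** 2 for a, b in zip(det_pos, pos))
        spatial = -0.5 * d2 / sigma_pos ** 2               # Gaussian log-likelihood, up to a constant
        semantic = (cosine(det_desc, desc) - 1.0) / temp   # 0 for identical descriptors
        log_scores.append(spatial + semantic)
    # Numerically stable softmax over all hypotheses.
    m = max(log_scores)
    weights = [math.exp(s - m) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    landmarks = [([1.0, 0.0, 0.0], [0.0, 0.0, 0.0]),
                 ([0.0, 1.0, 0.0], [5.0, 0.0, 0.0])]
    desc = mean_pool([[0.8, 0.2, 0.0], [1.0, 0.0, 0.0]])  # -> [0.9, 0.1, 0.0]
    print(association_probs(desc, [0.2, 0.0, 0.0], landmarks))
```

In this sketch, a detection whose descriptor and position are both close to an existing landmark receives most of the probability mass, while a detection that is dissimilar and far from every landmark falls through to the new-object hypothesis. A feature-embedding error of the kind marked by the red dashed line in the figure would inflate the semantic term for the wrong landmark.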

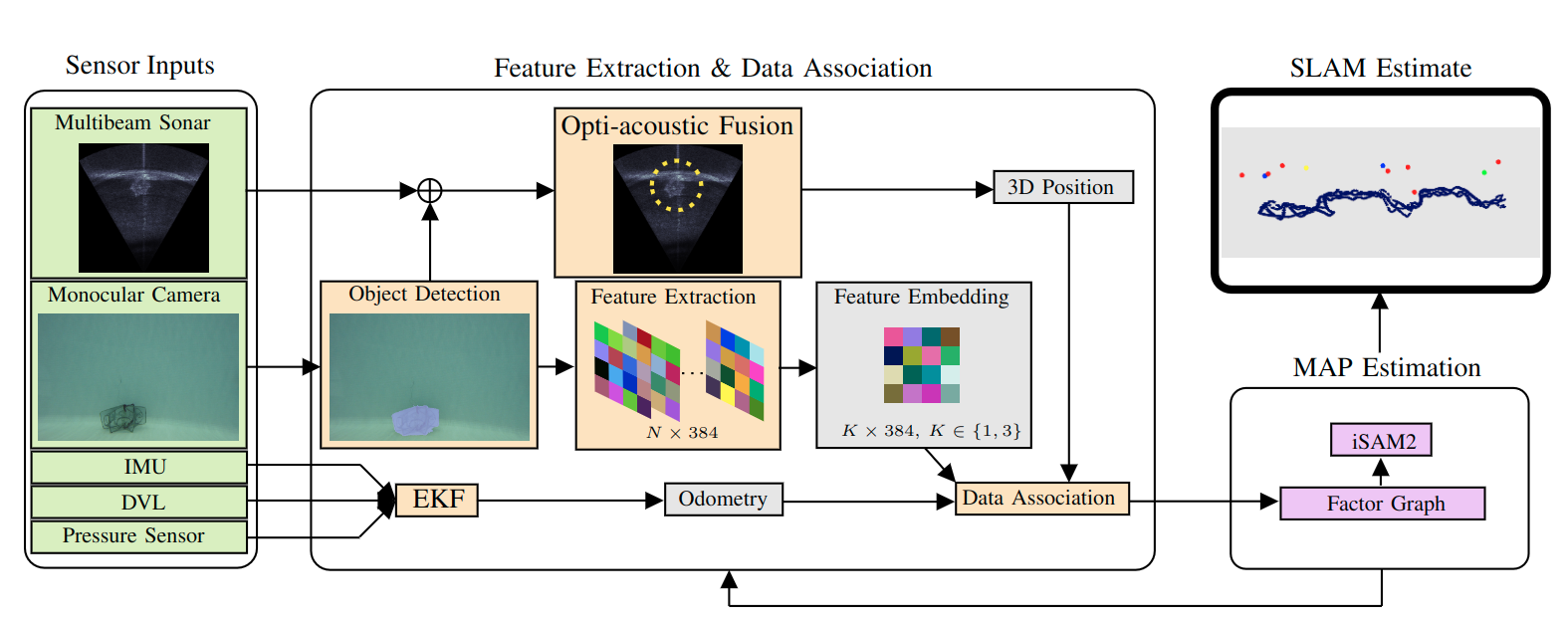

Method

The pipeline receives sensor inputs from a multibeam sonar, a monocular camera, an IMU, a DVL, and a pressure sensor. (1) Objects are segmented in the optical images, and features are extracted for each segmentation mask; the features are then projected to a fixed dimension for data association. (2) The pixel centroid of each segmentation mask is used to find the corresponding range in the sonar returns, from which the 3D position of the object is estimated. (3) In parallel, readings from the IMU, DVL, and pressure sensor are used to estimate odometry. The outputs of (1)-(3) are used to build a factor graph, which is optimized with iSAM2 to obtain map and trajectory estimates.
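Steps (2) and (3) can be illustrated with a minimal geometric sketch in plain Python. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`; a camera and sonar that are co-located and both forward-looking; zero roll and pitch; a north-east-down world frame; and seawater density for the pressure-to-depth conversion. All names (`Pose`, `depth_from_pressure`, `dead_reckon`, `object_position`) are hypothetical. In the pipeline these quantities feed the factor graph optimized by iSAM2 rather than being chained open-loop as they are here.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    x: float      # north [m]
    y: float      # east [m]
    depth: float  # down [m]
    yaw: float    # heading [rad], assumed to come from the IMU


def depth_from_pressure(p_pa: float, p_atm: float = 101325.0,
                        rho: float = 1025.0, g: float = 9.81) -> float:
    """Hydrostatic depth from absolute pressure; rho=1025 assumes seawater."""
    return (p_pa - p_atm) / (rho * g)


def dead_reckon(pose: Pose, v_fwd: float, v_stbd: float, yaw: float,
                p_pa: float, dt: float) -> Pose:
    """One odometry step: rotate the DVL body-frame velocity by the IMU yaw and
    integrate it horizontally; take depth directly from the pressure sensor."""
    c, s = math.cos(yaw), math.sin(yaw)
    return Pose(x=pose.x + (c * v_fwd - s * v_stbd) * dt,
                y=pose.y + (s * v_fwd + c * v_stbd) * dt,
                depth=depth_from_pressure(p_pa),
                yaw=yaw)


def object_position(pose: Pose, u: float, v: float, sonar_range: float,
                    fx: float, fy: float, cx: float, cy: float
                    ) -> Tuple[float, float, float]:
    """World-frame (NED) object position from a mask centroid (u, v) and the
    sonar range along that pixel's bearing (co-located sensors assumed)."""
    # Back-project the centroid to a unit ray in the camera frame
    # (x right, y down, z forward) and scale it by the sonar range.
    rx, ry, rz = (u - cx) / fx, (v - cy) / fy, 1.0
    n = math.sqrt(rx * rx + ry * ry + rz * rz)
    xc, yc, zc = (sonar_range * rx / n, sonar_range * ry / n, sonar_range * rz / n)
    # Camera -> body (forward, starboard, down) for a forward-looking camera.
    xb, yb, zb = zc, xc, yc
    # Body -> world using yaw only (roll and pitch assumed zero).
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * xb - s * yb,
            pose.y + s * xb + c * yb,
            pose.depth + zb)


if __name__ == "__main__":
    pose = Pose(0.0, 0.0, 0.0, 0.0)
    p_5m = 101325.0 + 1025.0 * 9.81 * 5.0  # absolute pressure at about 5 m depth
    pose = dead_reckon(pose, v_fwd=0.5, v_stbd=0.0, yaw=0.0, p_pa=p_5m, dt=4.0)
    # Centroid at the principal point and a 3 m sonar range: object straight ahead.
    print(object_position(pose, u=320.0, v=240.0, sonar_range=3.0,
                          fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

In this sketch the camera supplies only a bearing and the sonar only a range, so neither alone fixes the object's 3D position; combining them does. A range error of the kind marked by the cyan dashed line in the figure shifts the estimate along the viewing ray.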

Paper

Opti-Acoustic Semantic SLAM with Unknown Objects in Underwater Environments

Kurran Singh, Jungseok Hong, Nicholas R. Rypkema, and John J. Leonard